As online platforms grow, maintaining healthy interactions and safeguarding the user experience matter more than ever. Detecting toxic content, such as text that is offensive or mentions terms and topics that can harm online discourse, is crucial not only for upholding community standards but also for protecting your monetization potential as a business, site owner, or individual.

In this guide, we will explore how you can leverage the powerful features of Google’s Natural Language API to automatically scan and identify problematic text directly in Google Sheets. This integration can help you manage large volumes of user-generated or third-party-generated content efficiently, ensuring that your platform remains a safe and welcoming space for all users.

Regardless of your role or the content you aim to review, this tutorial will provide you with the tools and knowledge needed to implement an effective content moderation system, all without needing to write a single line of code.

About the method: How does toxicity detection for content moderation in NLP work?

Toxicity detection in natural language processing (NLP) is a critical technology for identifying and mitigating harmful or offensive content in text. With the help of machine learning APIs, it lets organisations and individuals who handle content automate the task of content moderation.

In NLP, content moderation is typically treated as a classification problem, which is a type of supervised machine learning. The goal is to assign text to predefined classes based on whether, and how, it is toxic. In the simplest case the classes are just toxic and non-toxic. Multi-label systems, such as the one described below, score each text against several categories at once.

When training PaLM 2, Google’s team added special control tokens marking text toxicity to a subset of the pre-training data. These tokens were based on signals from a stable release of the Perspective API, a free API that uses machine learning to identify “toxic” comments across multiple categories and make it easier to host better conversations online. This, combined with PaLM 2’s broader capabilities, has improved its ability to detect different categories of toxic text written in different languages.

About the model: Google Cloud’s Natural Language API

The Natural Language API is a versatile API that draws on a vast body of knowledge about language structure, grammar, sentiment, and real-world entities. It’s trained on massive amounts of text data, allowing it to:

- Understand context and entities: It goes beyond individual words, considering the surrounding text and even real-world knowledge to grasp the meaning of text via entity analysis.

- Detect sentiment: It can sense emotions like joy, anger, or sadness expressed in the text, not only at a document level (the entire text) but also at the entity level (sentiment associated with a specific entity mentioned in the text).

- Classify text: It is pre-trained to match the text you analyze against more than 1,300 content categories.

- Analyze syntax: It can parse the text you provide, breaking it down into its syntactic components to reveal the grammatical structure and relationships between different parts of the sentence.

Check out the other tutorials about this API published on our website:

How to do Syntax Analysis with Google’s Natural Language API in Google Sheets (Apps Script)

How to do Sentiment Analysis with Google’s Natural Language API in Google Sheets (Apps Script)

How to do Entity Extraction with Google’s Natural Language API in Google Sheets (Apps Script)

How to do Text Classification with Google’s Natural Language API in Google Sheets (Apps Script)

How Google Cloud’s Natural Language API text moderation module works

Google Cloud’s Natural Language API module for Text Moderation uses Google’s PaLM 2 foundation model to identify a wide range of harmful content, including hate speech, bullying, and sexual harassment.

Text moderation models analyse a document against a list of safety attributes, which include “harmful categories” and topics that may be considered sensitive. A document is any text you provide, such as an article, a title, a paragraph, or a user comment.

For each safety attribute, the model then returns two things: the attribute’s label and the model’s confidence in that label.

There are 16 safety attribute categories: Toxic; Derogatory; Violent; Sexual; Insult; Profanity; Death, Harm & Tragedy; Firearms & Weapons; Public Safety; Health; Religion & Belief; Illicit Drugs; War & Conflict; Finance; Politics; and Legal.

Each safety attribute has an associated confidence score between 0.00 and 1.00, reflecting the likelihood of the input or response belonging to a given category.
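To make that output format concrete, here is a minimal Python sketch that turns a response into a simple category-to-score mapping. It assumes the REST response shape of the v1 `documents:moderateText` method. In that shape, a `moderationCategories` list holds objects with a `name` and a `confidence`. The function name `parse_moderation_response` and the sample values are hypothetical and are not real API output.

```python
from typing import Any


def parse_moderation_response(response: dict[str, Any]) -> dict[str, float]:
    """Map each moderation category name to its confidence score (0.0 to 1.0)."""
    # Assumption: the JSON body contains a "moderationCategories" list whose
    # items look like {"name": "Toxic", "confidence": 0.12}.
    scores: dict[str, float] = {}
    for category in response.get("moderationCategories", []):
        scores[category["name"]] = float(category.get("confidence", 0.0))
    return scores


# Hypothetical, truncated response used only to illustrate the shape.
example_response = {
    "moderationCategories": [
        {"name": "Toxic", "confidence": 0.12},
        {"name": "Profanity", "confidence": 0.05},
        {"name": "Finance", "confidence": 0.71},
    ]
}

print(parse_moderation_response(example_response))
# → {'Toxic': 0.12, 'Profanity': 0.05, 'Finance': 0.71}
```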

Additional resources on content moderation

Check out these additional resources from Google Cloud to practice working with the API’s content moderation module:

- Code lab for content moderation in Python for the Perspective API for Google Cloud

- Text moderation with Natural Language API in Python

- Content moderation module documentation

Step-by-step guide on using the Natural Language API Text Moderation Module in Google Sheets via Apps Script

Prerequisites

- You have a project set up in Google Cloud (see how to create a project in Google Cloud)

- For this project, you have enabled Google Cloud’s Natural Language API (see how to enable a Google Cloud API)

- You have billing enabled for your project (see how to enable billing for your project)

Get your API key

Having selected your Google Cloud project, navigate to the APIs and Services menu > Credentials.

Then, click on the Create Credentials button from the navigation next to the page title, then select API Key from the drop-down menu.

API keys are the easiest authentication method to use, but also the least secure, so you might consider alternatives for more complex projects.

What is the difference between API, OAuth client ID and Service account authentication?

In short:

- An API key gives basic, project-level access, like a library card.
- An OAuth client ID gives user-specific access that requires the user’s authorization, like a bank card with a PIN.
- A service account gives an application its own identity, so it can access resources securely without a user present, like a company credit card.

Once you select API Key, a pop-up indicates that the key is being created. When it’s ready, the key appears on screen for you to copy.

You can always navigate back to this section of your project, and reveal the API key at a later stage, using the Show Key button. If you ever need to edit or delete the API key, you can do so from the drop-down menu.

Extract and organise the text you want to analyse

The next step is to decide on the content you want to analyse and organise it in Google Sheets. The Google Cloud Natural Language API can moderate both short snippets of text and longer documents.

With that in mind, work out the moderation needs for each type of content: the platform where it lives, who generates it, and how you can collect it.

For today’s demo, I’ve scraped a small sample dataset from several subreddits. The output file has a simple structure: the author of each post and the content of that post.

Note: I haven’t included the subreddits in the file, as I don’t want to promote any of them. Outside of a demo, and especially if you combine multiple data sources, I suggest you do include the source. This keeps your dataset tidy and gives you proper source tracking.

Once you have your content organised into a spreadsheet-suitable format, you can move on to the next step.
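If your scraped data starts life as a CSV file, a short Python sketch like the one below can tidy it before you paste it into Sheets. The column names `author` and `content` are assumptions based on the structure described above, and the helper `load_posts` is hypothetical. Adjust both to match your own file.

```python
import csv
import io
from typing import Iterable


def load_posts(lines: Iterable[str], id_column: str = "author",
               text_column: str = "content") -> list[tuple[str, str]]:
    """Return (identifier, text) pairs from CSV lines, skipping rows with no text."""
    posts: list[tuple[str, str]] = []
    for row in csv.DictReader(lines):
        text = (row.get(text_column) or "").strip()
        if text:  # empty posts have nothing to moderate
            posts.append(((row.get(id_column) or "").strip(), text))
    return posts


# In-memory sample; with a real file, pass open("posts.csv", newline="", encoding="utf-8").
sample = io.StringIO('author,content\nuser_a,First post\nuser_b,\nuser_c,"Hello, world"\n')
print(load_posts(sample))
# → [('user_a', 'First post'), ('user_c', 'Hello, world')]
```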

Make a copy of the Google Sheets Template and paste your content and API key

To prepare the data for analysis, we need to do two things: paste the API key into the script, and add the content we want to analyse to the template.

Paste your API key

In Google Sheets, open the Extensions menu, and click on Apps Script.

Open the attached TextModeration.gs script and select the text that says enterAPIkey. Replace it with your Google Cloud API key. Then click the disk icon to save, and return to the Google Sheets file.
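To see what the script does with the key, the sketch below builds the same kind of REST call in Python. It makes three assumptions:

- The endpoint is the public v1 endpoint `https://language.googleapis.com/v1/documents:moderateText`.
- The key is passed as a `key` query parameter.
- The request body contains a `PLAIN_TEXT` document.

This is not the code inside TextModeration.gs. The helper names are hypothetical, and `YOUR_API_KEY` is a placeholder.

```python
import json
import urllib.parse
import urllib.request

ENDPOINT = "https://language.googleapis.com/v1/documents:moderateText"


def build_moderation_request(text: str, api_key: str) -> urllib.request.Request:
    """Build (without sending) a POST request asking the API to moderate `text`."""
    url = f"{ENDPOINT}?{urllib.parse.urlencode({'key': api_key})}"
    body = {"document": {"type": "PLAIN_TEXT", "content": text}}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def moderate_text(text: str, api_key: str) -> dict:
    """Send the request and return the decoded JSON response (needs network access)."""
    with urllib.request.urlopen(build_moderation_request(text, api_key)) as response:
        return json.loads(response.read().decode("utf-8"))


# Usage (requires a valid key and a project with billing enabled):
# result = moderate_text("Some user comment", "YOUR_API_KEY")
```

Building the request separately from sending it makes it easy to inspect what would be sent before spending any API quota.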

Replace the content in the Working Sheet with your own

Navigate to the Working Sheet and replace the values in the first two columns, Content identifier and Content for analysis, with your own data.

The script maps each of the model’s confidence scores to its label, so don’t change the column names: they correspond to the moderation category labels.
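Conceptually, that mapping is a fixed column order. Every response is laid out in the same sequence, so each score lands under the right header. The Python sketch below illustrates the idea.

- The `CATEGORIES` order simply follows the list of 16 categories given earlier. It is an assumption, not necessarily the template’s exact column order.
- `scores_to_row` is a hypothetical helper.

```python
from typing import Optional, Sequence

# Assumed column order: follows the 16 categories listed earlier in this guide.
CATEGORIES = (
    "Toxic", "Derogatory", "Violent", "Sexual", "Insult", "Profanity",
    "Death, Harm & Tragedy", "Firearms & Weapons", "Public Safety", "Health",
    "Religion & Belief", "Illicit Drugs", "War & Conflict", "Finance",
    "Politics", "Legal",
)


def scores_to_row(scores: dict[str, float],
                  columns: Sequence[str] = CATEGORIES) -> list[Optional[float]]:
    """Order scores to match the column headers; missing categories stay blank (None)."""
    return [scores.get(name) for name in columns]


row = scores_to_row({"Toxic": 0.31, "Legal": 0.02})
print(row[0], row[1], row[-1])  # → 0.31 None 0.02
```

Leaving a missing category blank, rather than filling in 0, keeps "not returned" distinguishable from "scored zero".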

Run the analysis to identify toxic texts

To run the analysis, go to the first label column (column C in the template). Paste the formula below, referencing the cell that contains the text you want to analyse:

=TRANSPOSE(MODERATETEXT(B2))

Replace B2 with your own cell. TRANSPOSE lays the returned scores out across the row, so each score lands in its labelled column. Once you enter the formula, drag it down the column to analyse the other texts in your dataset.

Before the script runs for the first time, you might need to grant it permissions. Click “Go to Content Moderation with Google Natural language API (unsafe)”, then click “Allow”. Once you’ve authorised the script, the results should populate automatically.

To avoid making repeated calls to the API, copy the results and paste them back using Paste special > Values only.

To make the scores easier to interpret and to prepare them for visualisation, you can turn them into percentages (for example, via Format > Number > Percent).

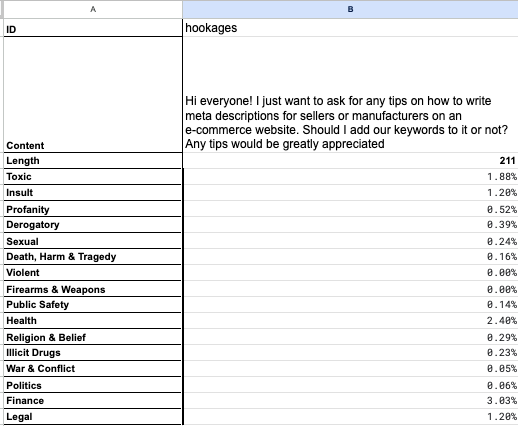

Remember, each score is the model’s confidence that the text belongs to that category of harmful or sensitive content. For example, the following text does not score high in any of the categories, so we can reasonably conclude it’s not harmful.

For another text in our dataset, the opposite is true: it scores highly in one or more categories.
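One simple way to turn scores into a decision is a threshold: flag a text for every category whose confidence meets a cut-off you choose. The 0.5 default below is an arbitrary assumption, not a value recommended by Google, and `flagged_categories` is a hypothetical helper. Tune the threshold against examples you’ve reviewed by hand.

```python
def flagged_categories(scores: dict[str, float], threshold: float = 0.5) -> list[str]:
    """Return categories at or above the threshold, highest confidence first."""
    hits = [name for name, confidence in scores.items() if confidence >= threshold]
    return sorted(hits, key=lambda name: scores[name], reverse=True)


print(flagged_categories({"Toxic": 0.82, "Insult": 0.64, "Health": 0.10}))
# → ['Toxic', 'Insult']
print(flagged_categories({"Toxic": 0.08, "Finance": 0.12}))
# → []
```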

You can also add conditional formatting to your file to quickly spot patterns in your analysed dataset.
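Conditional formatting rules boil down to mapping each score to a band. The sketch below expresses the same idea in Python, combined with the percentage display mentioned above. The 0.4 and 0.7 cut-offs are illustrative assumptions that you would mirror in your own formatting rules, and the helper names are hypothetical.

```python
def score_band(score: float, medium: float = 0.4, high: float = 0.7) -> str:
    """Bucket a 0.0-1.0 confidence score into low / medium / high."""
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"confidence must be between 0 and 1, got {score}")
    if score >= high:
        return "high"
    if score >= medium:
        return "medium"
    return "low"


def format_score(score: float) -> str:
    """Show the score as a whole-number percentage plus its band."""
    return f"{score:.0%} ({score_band(score)})"


print(format_score(0.734))  # → 73% (high)
print(format_score(0.05))   # → 5% (low)
```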

Let’s move on to understanding the data through visualisation.

Visualise the content moderation data (optional)

Although this step is optional, data visualisation is useful, especially when presenting a report to third-party stakeholders.

Here are a few ideas for visualisations that help this data come through in a report:

- Heat map of toxicity categories: Create a heat map to show the frequency of each toxicity category across all entries. This can help quickly identify which types of toxicity are most prevalent.

- Bar charts for each category: Use bar charts to compare the number of entries classified under each toxicity category. You can also create stacked bar charts to show the proportion of each category by different authors/sources.

- Word clouds: Generate word clouds for entries within each category to visualise the most commonly used words in contexts deemed toxic, profane, etc. This helps in identifying common language patterns within toxic entries.

- Interactive dashboard: Build an interactive dashboard where users can filter by author/source, toxicity category, or content length to explore specific aspects of the data. This could include tools for deeper analysis, like sentiment scoring or keyword extraction.
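Heat maps and bar charts both start from the same table: how many entries exceed a threshold in each category, optionally split by author or source. Here is a minimal Python sketch of that aggregation. It assumes each entry is a (source, scores) pair and uses an illustrative 0.5 threshold. The helper names and sample data are hypothetical. You could paste the resulting counts back into Sheets and chart them.

```python
from collections import Counter


def counts_by_source(entries: list[tuple[str, dict[str, float]]],
                     threshold: float = 0.5) -> dict[str, Counter]:
    """Count, per source, how many entries score at or above the threshold in each category."""
    table: dict[str, Counter] = {}
    for source, scores in entries:
        bucket = table.setdefault(source, Counter())
        for category, confidence in scores.items():
            if confidence >= threshold:
                bucket[category] += 1
    return table


def overall_counts(table: dict[str, Counter]) -> Counter:
    """Sum the per-source counts into one total per category."""
    total: Counter = Counter()
    for bucket in table.values():
        total.update(bucket)
    return total


entries = [
    ("user_a", {"Toxic": 0.91, "Insult": 0.22}),
    ("user_a", {"Toxic": 0.63}),
    ("user_b", {"Insult": 0.74, "Toxic": 0.10}),
]
table = counts_by_source(entries)
print(overall_counts(table))  # → Counter({'Toxic': 2, 'Insult': 1})
```

The per-source table maps directly onto a stacked bar chart (one bar per source). The overall counts feed a simple bar chart or one row of a heat map.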

You can also run your texts through additional analyses, such as sentiment analysis and entity analysis, and blend the results with the content moderation categories. That way, you can check for two kinds of correlation: between the mention of certain entities in the text and the overall toxicity of the comments, and between the tone of a comment and its content categories.

Why use Google’s Natural Language API content moderation in Google Sheets

Using Google’s Natural Language API for content moderation in Google Sheets can be very beneficial for businesses or individuals who manage large amounts of text. This includes social media managers, community managers, forum moderators, and website administrators who receive a ton of comments per day. Here are some of the benefits:

- Automated filtering of toxic content: The API automatically analyzes text within Google Sheets for inappropriate or undesirable content. This helps you maintain a clean and professional dataset without manually reviewing each entry.

- Beginner-friendly and efficient: The process works well for small to medium-sized datasets. The text moderation module returns results quickly, saving time and reducing the workload on human moderators. The template allows you to get started even without coding skills.

- Scalability: For bigger datasets, you can call the API from Python instead.

- Integration with other modules: The API itself has multiple modules, including entity analysis, sentiment analysis, text classification, and syntax analysis. This means you can not only automate the moderation process but also gain insight into the texts’ sentiment and entities, allowing for more informed data analysis.

- Robust data management: By flagging content based on the content moderation analysis, you can manage your data better. For instance, you could automatically sort posts in your community by topic and check whether they align with your code of conduct. As another example, you could detect publications on your website that reference finance or legal matters and run them through a more thorough editorial process.
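The editorial-review example could be sketched as a simple routing rule: send any text whose Finance or Legal confidence meets a threshold to a review queue. The category set, the 0.5 threshold, and the helper names are illustrative assumptions, not part of the template.

```python
REVIEW_CATEGORIES = frozenset({"Finance", "Legal"})


def needs_review(scores: dict[str, float],
                 categories: frozenset[str] = REVIEW_CATEGORIES,
                 threshold: float = 0.5) -> bool:
    """True if any of the watched categories meets the threshold."""
    return any(scores.get(name, 0.0) >= threshold for name in categories)


def split_for_review(items: list[tuple[str, dict[str, float]]]) -> tuple[list[str], list[str]]:
    """Split (identifier, scores) pairs into (needs review, publish as usual)."""
    review: list[str] = []
    publish: list[str] = []
    for identifier, scores in items:
        (review if needs_review(scores) else publish).append(identifier)
    return review, publish


pages = [
    ("post-1", {"Finance": 0.82, "Toxic": 0.03}),
    ("post-2", {"Health": 0.40}),
    ("post-3", {"Legal": 0.55}),
]
print(split_for_review(pages))
# → (['post-1', 'post-3'], ['post-2'])
```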

This integration effectively enhances the functionality of Google Sheets by adding a layer of advanced text analysis, which is particularly valuable in contexts where content quality and insight extraction are critical.

Learn how to incorporate content moderation into your SEO strategy

Incorporating content moderation into your organic strategy online can help you maintain not only a user-friendly website or community, but also a reputable brand. Effective content moderation ensures that all content aligns with quality guidelines and provides value to users, which can help improve online performance.

Here are a few project ideas for integrating content moderation into your organic strategy:

- Monitor social media mentions for toxic comments to maintain a brand toxicity score: identify and address harmful content to reduce its negative impact on brand reputation and SEO. You can apply this not only to your own brand but also to competitors, potential influencers, or brand partnerships.

- Monitor comments on your website to see whether they align with your content guidelines: ensure all user-generated content adheres to your quality and relevance standards.

- Monitor community posts to ensure they follow the code of conduct: run posts in your forum or community through content moderation analysis. This helps conversations keep a positive, respectful tone that supports user engagement and aligns with your code of conduct.

- Run your web content through content moderation algorithms to check whether it follows a good tone of voice and other best practices for online content safety.
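A “brand toxicity score” has no standard definition. One possible sketch, assuming you’ve already scored each mention, reports two figures: the average Toxic confidence, and the share of mentions at or above a chosen threshold. Several details here are assumptions:

- the metric names
- the 0.5 threshold
- the `brand_toxicity` helper
- treating a missing score as 0.0

```python
from statistics import mean


def brand_toxicity(mentions: list[dict[str, float]], category: str = "Toxic",
                   threshold: float = 0.5) -> dict[str, float]:
    """Summarise one category's scores across a set of brand mentions."""
    if not mentions:
        raise ValueError("no mentions to score")
    # Assumption: a mention without a score for the category counts as 0.0.
    values = [scores.get(category, 0.0) for scores in mentions]
    flagged = sum(1 for value in values if value >= threshold)
    return {
        "mean_confidence": mean(values),
        "share_flagged": flagged / len(values),
    }


mentions = [{"Toxic": 0.2}, {"Toxic": 0.8}, {"Toxic": 0.5}, {"Insult": 0.3}]
print(brand_toxicity(mentions))
```

Running the same summary on, say, each week’s mentions turns the two numbers into a trend you can track for your brand or for competitors.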

By integrating content moderation into your organic strategy, you create a more trustworthy and engaging online presence, which can support better performance in search engine rankings.

See what else you can do with this API

As mentioned earlier, Google’s Natural Language API has several additional capabilities that include text classification, entity analysis (which also includes entity sentiment analysis), sentiment analysis, and syntax analysis.

Explore other step-by-step guides for this API by visiting the tutorials, linked below:

How to do Syntax Analysis with Google’s Natural Language API in Google Sheets (Apps Script)

How to do Sentiment Analysis with Google’s Natural Language API in Google Sheets (Apps Script)

How to do Entity Extraction with Google’s Natural Language API in Google Sheets (Apps Script)

How to do Text Classification with Google’s Natural Language API in Google Sheets (Apps Script)

Or go ahead and grab any of the free Google Sheets templates that feature this API:

Lazarina Stoy is a Digital Marketing Consultant with expertise in SEO, Machine Learning, and Data Science, and the founder of MLforSEO. Lazarina’s expertise lies in integrating marketing and technology to improve organic visibility strategies and implement process automation.

A University of Strathclyde alumna, Lazarina has worked across sectors like B2B, SaaS, and big tech, with notable projects for AWS, Extreme Networks, neo4j, Skyscanner, and other enterprises.

Lazarina champions marketing automation by creating resources for SEO professionals and speaking at industry events globally on the significance of automation and machine learning in digital marketing. Her contributions to the field are recognized in publications like Search Engine Land, Wix, and Moz, to name a few.

As a mentor on GrowthMentor and a guest lecturer at the University of Strathclyde, Lazarina dedicates her efforts to education and empowerment within the industry.